Integrative Relational Learning on Multimodal Oncology Data

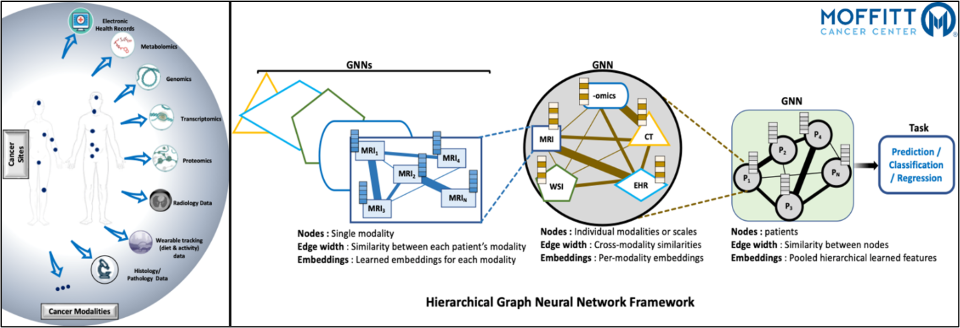

Integrative Relational Learning (IRL) is a method for learning jointly from multiple data sources, of the same or different types, in order to identify relationships and patterns that would otherwise go unobserved. Applied to oncology, IRL combines and analyzes cancer data across levels of granularity, resolution, and modality to gain a more comprehensive understanding of the disease and improve patient outcomes. Such data include multi-omics (genomics, proteomics, and metabolomics), clinical data such as electronic health records, and imaging data such as pathology and radiology scans.

Graph neural networks (GNNs) are a class of artificial neural networks designed to process data represented as graphs, which makes them well suited to analyzing complex, interconnected multimodal oncology data. GNNs have been used extensively for disease diagnosis and prognosis, patient survival prediction and stratification, and drug-patient suitability. However, given the complexity of the oncology domain, developing and fine-tuning GNN models for multimodal oncology data is still an active area of research.

In this project, we are working on a GNN-based hierarchical relational model that can efficiently learn from multimodal, heterogeneous datasets for improved prediction of clinical outcomes. Our framework (1) generates graphs from heterogeneous, multi-resolution datasets, (2) learns and improves relational embeddings at various stages of the data hierarchy using GNNs, (3) fuses the learned embeddings into a unified graph representation, and (4) uses the fused representation to improve performance on downstream tasks such as survival analysis, recurrence prediction, and distant metastasis prediction. The solution fuses unobserved but interrelated cancer variables in non-Euclidean space through a scalable framework, with the aim of providing insights into the underlying biology of cancer, identifying new therapeutic targets, and improving the accuracy of diagnosis and prognosis.
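The graph-then-fuse idea can be illustrated with a very small sketch. It is not the project's model: it assumes a toy patient graph per modality, uses one round of unweighted mean aggregation in place of a trained GNN layer (no learned weights or nonlinearity), and fuses by simple concatenation. All names and data (`mean_aggregate`, `fuse`, the toy graphs) are hypothetical.

```python
from typing import Dict, List

Graph = Dict[int, List[int]]       # adjacency list: node -> neighbours
Features = Dict[int, List[float]]  # node -> feature vector


def mean_aggregate(graph: Graph, feats: Features) -> Features:
    """One round of message passing: average each node with its neighbours."""
    out: Features = {}
    for node, neighbours in graph.items():
        group = [node] + neighbours  # include the node itself (self-loop)
        dim = len(feats[node])
        out[node] = [
            sum(feats[n][d] for n in group) / len(group) for d in range(dim)
        ]
    return out


def fuse(*embeddings: Features) -> Features:
    """Fuse per-modality embeddings by concatenating them node by node."""
    nodes = embeddings[0].keys()
    return {n: [x for emb in embeddings for x in emb[n]] for n in nodes}


# Hypothetical toy data: three patients (nodes 0..2), two modalities.
omics_graph: Graph = {0: [1], 1: [0, 2], 2: [1]}
omics_feats: Features = {0: [1.0, 0.0], 1: [0.0, 1.0], 2: [1.0, 1.0]}
imaging_graph: Graph = {0: [2], 1: [], 2: [0]}
imaging_feats: Features = {0: [0.2], 1: [0.5], 2: [0.8]}

omics_emb = mean_aggregate(omics_graph, omics_feats)        # step (2), per modality
imaging_emb = mean_aggregate(imaging_graph, imaging_feats)  # step (2), per modality
fused = fuse(omics_emb, imaging_emb)                        # step (3)

for patient, vector in fused.items():
    print(patient, vector)
```

In a trained model, each aggregation step would be followed by a learned linear map and a nonlinearity, and the fused vectors would feed a task head such as a survival predictor.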

Multimodal Hierarchical Transformers

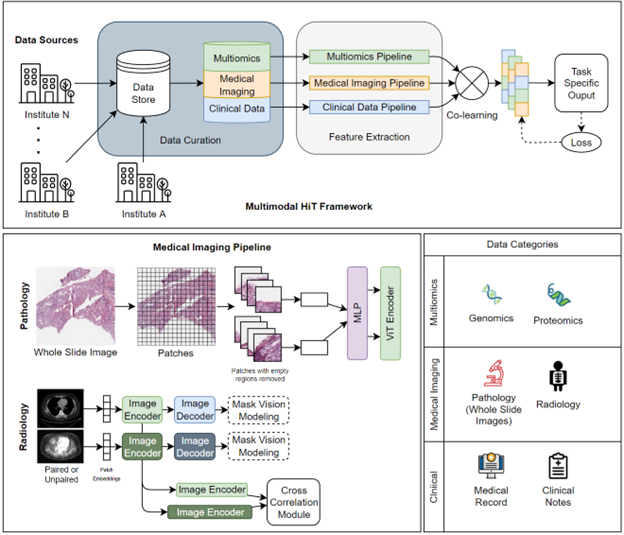

Multimodal Hierarchical Transformers in Oncology is a research area that leverages machine learning and natural language processing to improve cancer diagnosis and treatment. Our research aims to develop a deep learning model that can integrate and analyze multiple types of data, such as imaging, genetic, and clinical information, to provide more accurate and personalized cancer diagnosis and treatment recommendations.

To address the challenge of analyzing and interpreting multimodal data, we propose a multimodal hierarchical transformer model. The model is composed of multiple layers, each designed to capture a different level of representation. The lower layers learn the basic features of each modality, such as image segments or genetic sequences, while the upper layers learn the relationships between modalities. This hierarchical structure allows the model to learn a more comprehensive representation of the data.

The figure illustrates the data flow from the different modalities through the transformer layers. The data are first preprocessed to extract the essential features, then passed through the lower and upper layers in turn. The final output of the model is a predicted diagnosis and treatment plan. The model is trained using a combination of supervised and unsupervised learning, which allows it to learn from both labeled and unlabeled data. This lets the model draw on a larger heterogeneous dataset, which increases its ability to generalize to new cases.
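The two-level structure described above can be sketched in a few lines. This is an illustration only, under strong simplifying assumptions: attention uses identity projections (queries, keys, and values are the tokens themselves, with no learned weights), each modality is summarized by mean pooling, and the token values are made up. The names `self_attention`, `mean_pool`, `image_tokens`, and `gene_tokens` are hypothetical.

```python
import math
from typing import List

Vector = List[float]


def softmax(scores: Vector) -> Vector:
    """Numerically stable softmax."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(tokens: List[Vector]) -> List[Vector]:
    """Scaled dot-product self-attention with identity projections."""
    dim = len(tokens[0])
    out = []
    for q in tokens:
        scores = [sum(a * b for a, b in zip(q, k)) / math.sqrt(dim) for k in tokens]
        weights = softmax(scores)
        out.append([sum(w * v[d] for w, v in zip(weights, tokens)) for d in range(dim)])
    return out


def mean_pool(tokens: List[Vector]) -> Vector:
    """Summarize a token sequence as the average of its tokens."""
    dim = len(tokens[0])
    return [sum(t[d] for t in tokens) / len(tokens) for d in range(dim)]


# Hypothetical preprocessed features, already embedded in a shared 2-d space.
image_tokens = [[1.0, 0.0], [0.8, 0.2]]
gene_tokens = [[0.0, 1.0], [0.1, 0.9], [0.2, 0.8]]

# Lower level: attention within each modality, then one summary per modality.
modality_summaries = [
    mean_pool(self_attention(tokens)) for tokens in (image_tokens, gene_tokens)
]

# Upper level: attention across the modality summaries.
joint = self_attention(modality_summaries)
patient_repr = mean_pool(joint)
print(patient_repr)
```

A real model would add learned projections, multiple heads, positional or modality embeddings, and a prediction head on top of `patient_repr`.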

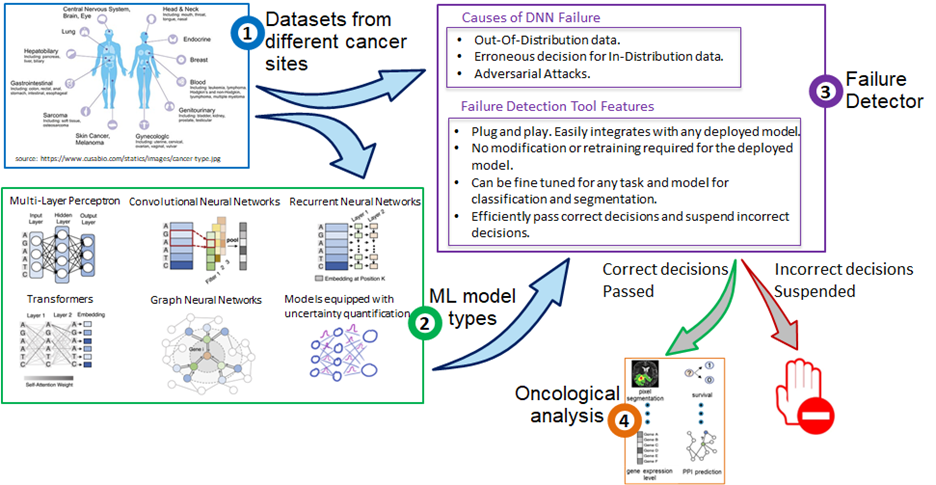

Failure Detection Tool for Trustworthy Models

Trustworthiness and reliability are imperative for the practical use of deep neural networks (DNNs) in the modern healthcare system. With DNNs supporting health practitioners in diagnosis, prognosis, and treatment planning, it is essential to have safety mechanisms in place that detect failures and performance degradation of deployed DNNs and alert users to them. A model deployed in live settings may fail when the input is out-of-distribution or noisy, for example after a change in settings or an upgrade of the medical software. Another cause of failure is adversarial attacks, which deceive the model into making incorrect decisions by modifying the input in ways that may be imperceptible to the human eye. In live settings there is no ground truth, so a solution is needed that detects likely incorrect decisions and suspends them for review by a health practitioner.

A practical approach to enhancing the reliability of DNNs in live settings is a plug-and-play solution that integrates seamlessly with any deployed classification or segmentation model. Our proposed solution does not require retraining an already deployed model. The Failure Detection Tool itself is fine-tuned for the task at hand and works for both classification and segmentation tasks. The tool efficiently separates out the erroneous decisions made by the model and suspends them, so that only decisions judged correct are passed through. The aim of this project is to improve the trustworthiness of DNNs and enhance user confidence in model predictions.
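The plug-and-play pattern, wrapping a deployed model without retraining it and suspending doubtful decisions, can be sketched as follows. The detection rule here is a plain maximum-softmax-probability threshold, a common baseline that is swapped in for illustration and is not the project's Failure Detection Tool. The function `guarded_predict`, the `toy_model`, and the threshold value are all hypothetical.

```python
import math
from typing import Callable, List, Optional, Tuple


def softmax(logits: List[float]) -> List[float]:
    """Numerically stable softmax."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def guarded_predict(
    model: Callable[[List[float]], List[float]],
    x: List[float],
    threshold: float = 0.9,
) -> Tuple[Optional[int], float]:
    """Wrap a deployed classifier; return (label, confidence).

    The label is None when the decision is suspended for human review.
    Baseline rule (an assumption, not the authors' method): suspend when
    the maximum softmax probability falls below `threshold`.
    """
    probs = softmax(model(x))
    confidence = max(probs)
    if confidence < threshold:
        return None, confidence
    return probs.index(confidence), confidence


def toy_model(x: List[float]) -> List[float]:
    """Hypothetical stand-in for a deployed two-class model returning logits."""
    return [2.0 * x[0], 2.0 * x[1]]


confident = guarded_predict(toy_model, [3.0, 0.0])  # clear-cut input
uncertain = guarded_predict(toy_model, [0.1, 0.0])  # ambiguous input
print(confident, uncertain)
```

Because the wrapper only reads the model's outputs, the deployed model is left untouched. A softmax threshold alone is known to be a weak defence against adversarial inputs, so a production detector would need a stronger scoring function.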

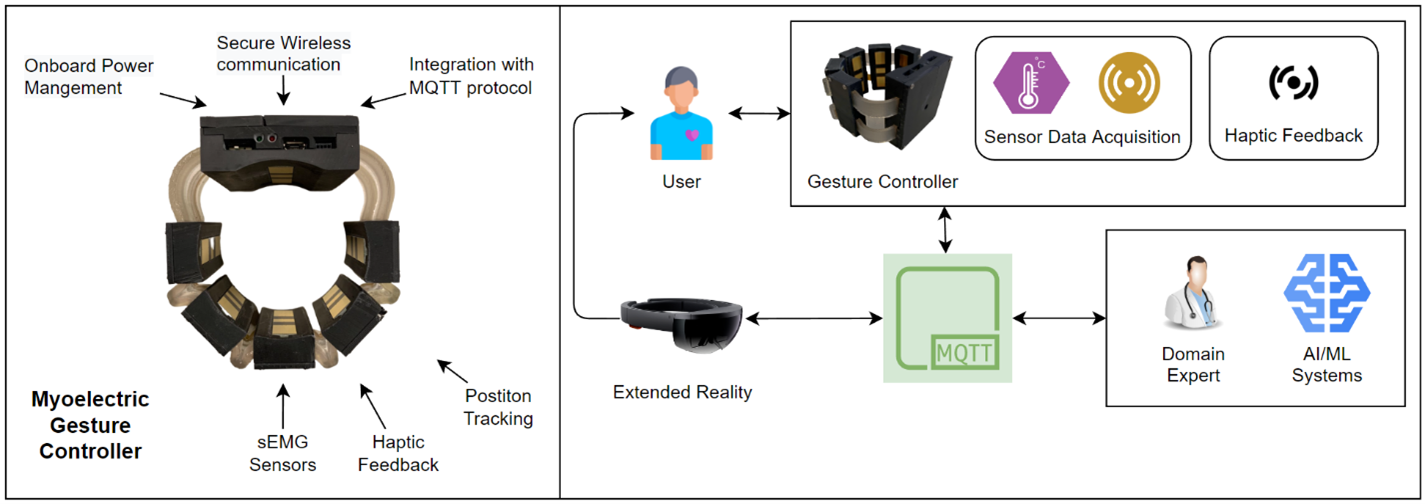

Patient Monitoring with Extended Reality and Internet of Medical Things

Our Internet of Medical Things (IoMT) wearable device, the Myoelectric Gesture Controller, is worn on the body and records data from sEMG (surface electromyography) and IMU (inertial measurement unit) sensors, provides haptic feedback, and uses the MQTT protocol for secure wireless communication. The sEMG sensors detect the electrical activity generated by muscle contractions and can be used to measure muscle activity or muscle fatigue. The IMU sensors measure the device's orientation and movement in 3D space, providing information about the user's movements and posture.

The data collected by the device can be analyzed in real time and used to predict gestures for extended reality (XR) systems, including augmented reality (AR), and can be provided to medical domain experts for patient care and rehabilitation. This is particularly useful for physical therapy and rehabilitation, where the device can track progress and measure improvements in muscle activity and movement over time. AR or XR can be incorporated into the rehabilitation process to provide visual cues, feedback, and guidance in real time, making the therapy more engaging and interactive.
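As a rough illustration of how sEMG samples might be turned into a muscle-activity feature and packaged for transmission, the sketch below computes a sliding-window root-mean-square (RMS), a standard sEMG amplitude measure, and serializes it as JSON. The window sizes, sample values, device identifier, and payload fields are hypothetical and are not the device's actual format. Publishing over MQTT would additionally need a client library and a broker, which are left out here.

```python
import json
import math
from typing import Dict, List


def rms(window: List[float]) -> float:
    """Root-mean-square amplitude of one window of sEMG samples."""
    return math.sqrt(sum(s * s for s in window) / len(window))


def windowed_rms(signal: List[float], size: int, step: int) -> List[float]:
    """RMS over sliding windows of `size` samples, advancing by `step`."""
    return [
        rms(signal[i:i + size]) for i in range(0, len(signal) - size + 1, step)
    ]


def build_payload(
    device_id: str, emg_rms: List[float], orientation: Dict[str, float]
) -> str:
    """Serialize features as a JSON string (hypothetical message layout)."""
    return json.dumps(
        {"device": device_id, "emg_rms": emg_rms, "imu": orientation}
    )


# Hypothetical sEMG samples: rest, then a muscle contraction, then rest.
emg = [0.0, 0.1, -0.1, 0.9, -0.8, 0.7, -0.9, 0.1, 0.0, -0.1]
features = windowed_rms(emg, size=4, step=2)

# Hypothetical IMU orientation in degrees.
payload = build_payload(
    "mgc-01", features, {"roll": 0.0, "pitch": 12.5, "yaw": -3.0}
)
print(payload)
```

The RMS values rise during the contraction and fall afterwards, which is the kind of signal a gesture predictor or a rehabilitation progress tracker could consume.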